Typically you have a bunch of pipelines that are started by one or more triggers. Sometimes, a pipeline needs to be manually triggered. For example, when the finance department is closing the fiscal year, they probably want to run the ETL pipeline a couple of times on-demand, to make sure their latest changes are reflected in the reports. Since you don’t want them to contact you every time to start a pipeline, it might be an idea to give them permission to start the pipeline themselves.

This can be done with tools such as Azure Logic Apps or a Power App, but in my case the users also wanted to view the progress of the pipeline (did something crash? Why is it taking so long? etc.) and developing a Power App with all those features seemed a bit cumbersome to me. Instead, we gave them permission on ADF itself so they can start the pipelines. There’s one problem though: there’s only one built-in role for ADF in Azure, and it’s the Data Factory Contributor role. That’s a bit too much permission, as anyone with that role can change anything in ADF. You don’t want that.

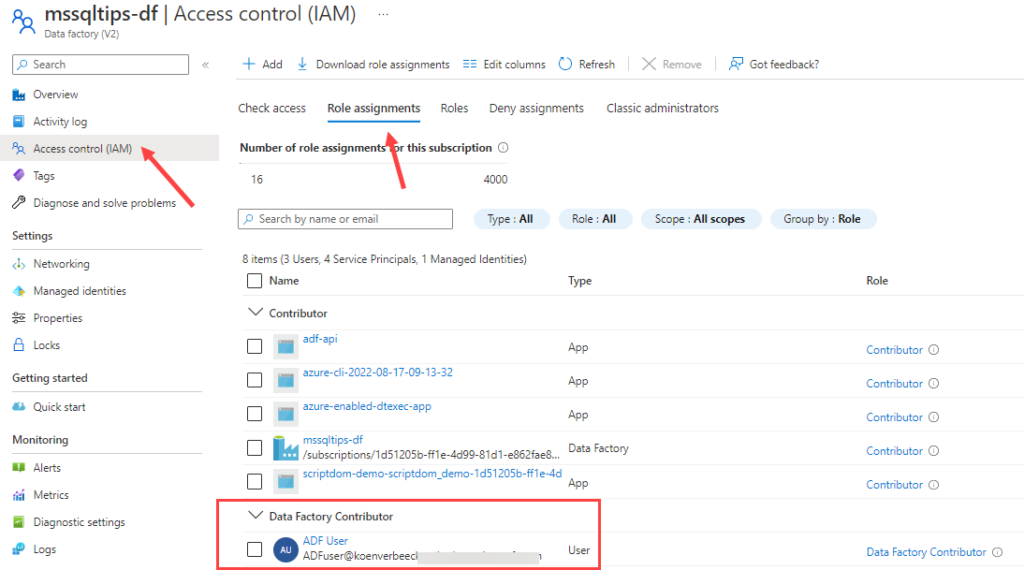

In the screenshot above, the user “ADF User” has been assigned to the Data Factory Contributor role, which means this user can do anything in ADF. The solution is to create a custom role. In the resource group, go to access control and add a new custom role.

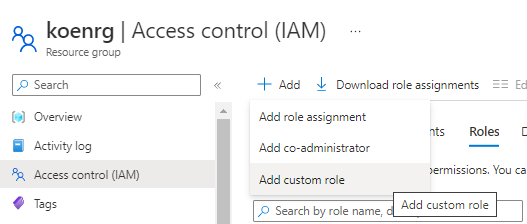

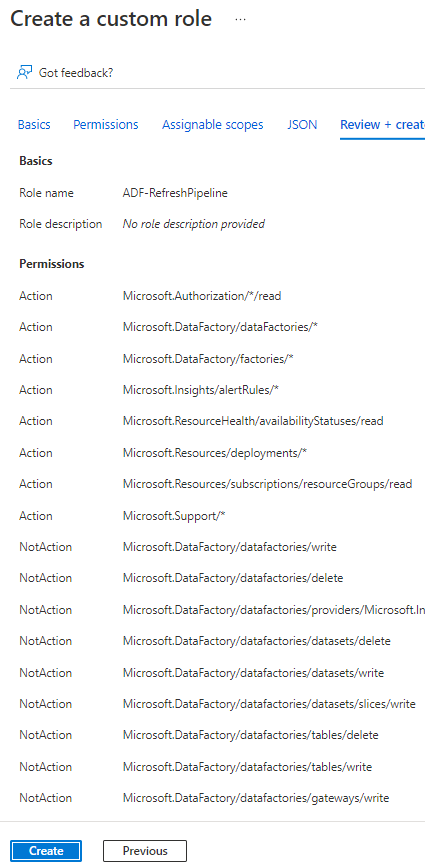

Give the new role a name and optionally a description. To get started with the permissions, we clone the existing Data Factory Contributor role.

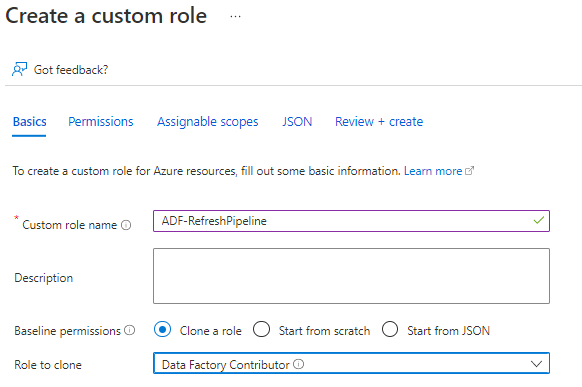

In the next screen, you’ll see all the permissions this role has access to.

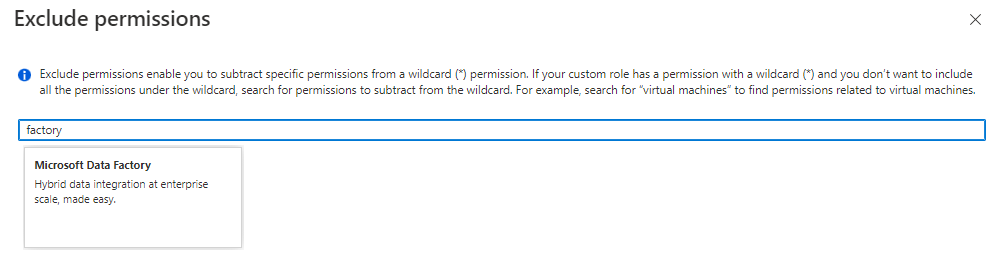

Highlighted are the two permission sets for Data Factory. As you can see, they both have a wildcard, which means all permissions are granted. Click on Exclude permissions. This lets us add permissions to an exclusion list, which restricts the access. Search for the Microsoft Data Factory permissions.

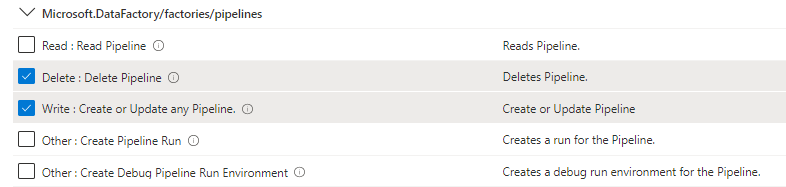

You’ll get a huge list of permissions. I selected everything that had to do with updating, creating or deleting, with the exception of creating pipeline (debug) runs.

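All of those exclusions (NotActions) are now added to the list. Note that these are not true deny rules: NotActions are only subtracted from the actions this role grants, so a second role assignment could still grant them.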

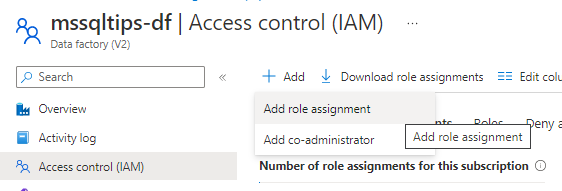
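To make the effect of the wildcards and the exclusions a bit more tangible, here is a minimal Python sketch of how the effective permissions of such a role can be reasoned about: an operation is granted when it matches one of the actions and none of the NotActions. This is only a model for illustration, not Azure’s actual implementation. The two wildcard actions are the Data Factory entries cloned from the contributor role; the `NOT_ACTIONS` list is a small illustrative subset (the list I selected in the portal is much longer), and the helper names are made up.

```python
from fnmatch import fnmatchcase

# The two Data Factory wildcards cloned from the Data Factory Contributor role.
ACTIONS = [
    "Microsoft.DataFactory/dataFactories/*",
    "Microsoft.DataFactory/factories/*",
]

# Illustrative subset of the exclusions; the real list is much longer.
NOT_ACTIONS = [
    "Microsoft.DataFactory/factories/write",
    "Microsoft.DataFactory/factories/delete",
    "Microsoft.DataFactory/factories/pipelines/write",
    "Microsoft.DataFactory/factories/pipelines/delete",
    "Microsoft.DataFactory/factories/datasets/write",
    "Microsoft.DataFactory/factories/datasets/delete",
]


def _matches(operation, patterns):
    """Case-insensitive wildcard match of one operation against patterns."""
    op = operation.lower()
    return any(fnmatchcase(op, pattern.lower()) for pattern in patterns)


def is_granted(operation, actions=ACTIONS, not_actions=NOT_ACTIONS):
    """Granted = matched by an action and not matched by any NotAction."""
    return _matches(operation, actions) and not _matches(operation, not_actions)


if __name__ == "__main__":
    for op in (
        "Microsoft.DataFactory/factories/pipelines/createRun/action",
        "Microsoft.DataFactory/factories/pipelines/read",
        "Microsoft.DataFactory/factories/pipelines/write",
    ):
        print(op, "->", "granted" if is_granted(op) else "excluded")
```

With this subset, starting a pipeline run and reading pipelines are still granted, while writing a pipeline is excluded.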

Go back to your Data Factory instance and to the access control. Add a new role assignment (and in my case I deleted the role assignment of Data Factory Contributor).

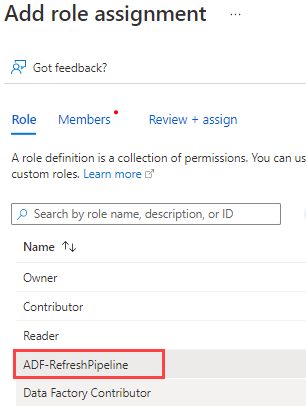

Choose your newly created custom role.

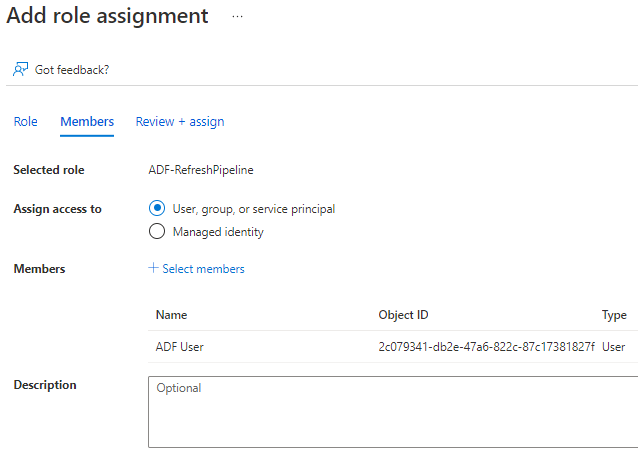

Select the users you want to add to this role.

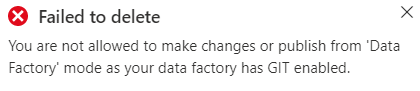

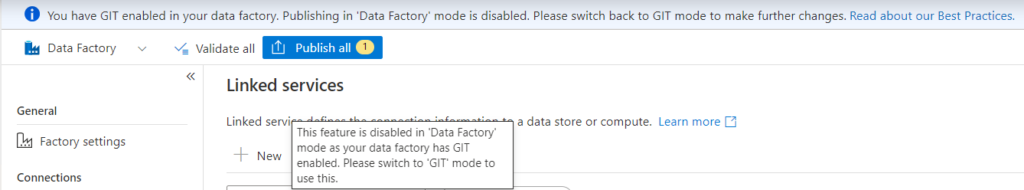

When the user now logs in to Data Factory Studio, some features will stop working. For example, objects cannot be deleted, changed or created:

There’s a (confusing) message saying Git is enabled and that’s why changes cannot be made. But in reality, it’s not because of Git but because of the RBAC role.

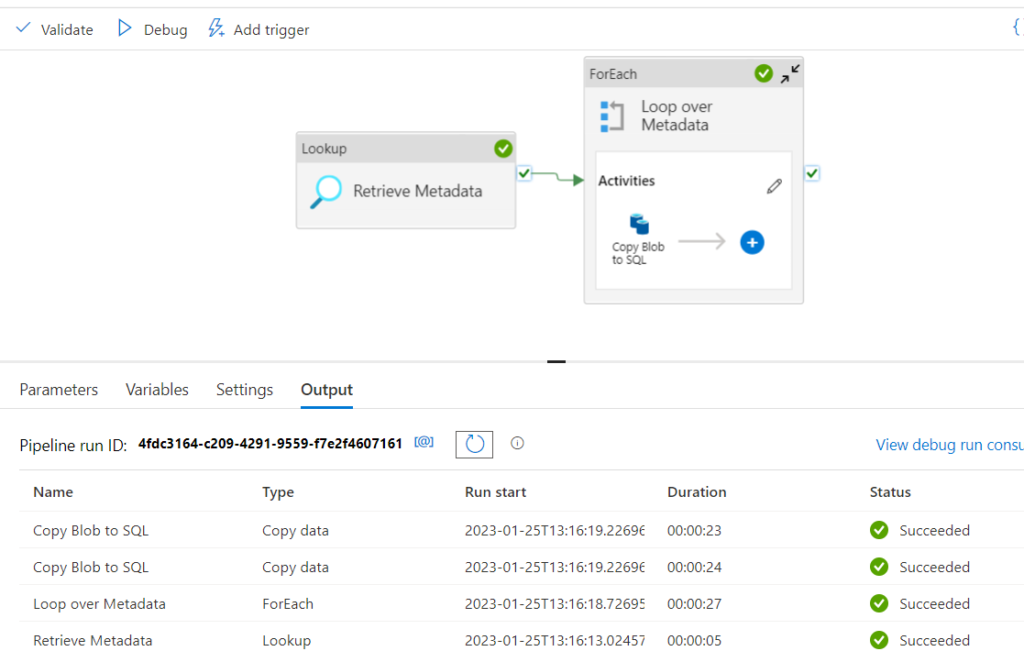

The user can however debug a pipeline:

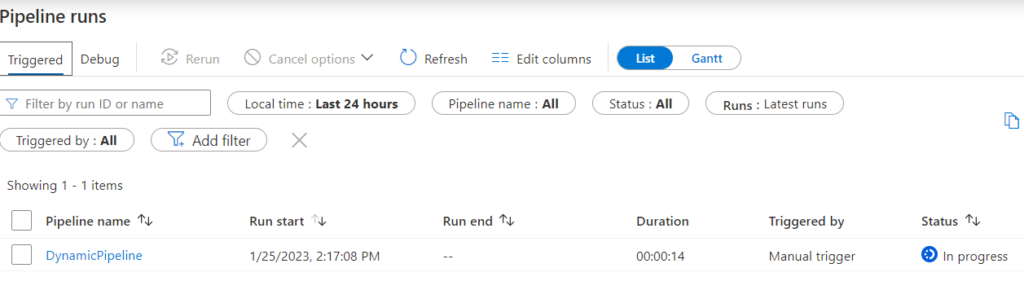

Or the user can trigger an existing pipeline:

It’s also possible to rerun pipelines, or cancel them.

------------------------------------------------


But how do you restrict a user to triggering or debugging only one particular pipeline?

As far as I know, this is not possible directly in ADF. It’s the same in SSIS. You can give someone in the SSIS catalog permission to execute packages, but not only a single package. It’s all or nothing. If you want to give someone permission to a single pipeline, I would use a Logic App, an Azure Function or a Power App to do this. You give them permission to execute that object (whatever you use), and that will trigger the pipeline.
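As a rough illustration of that wrapper idea, the logic inside such a Logic App, Azure Function or Power App boils down to an allow-list check before the pipeline is started. The sketch below is plain Python and assumes nothing about the hosting service: the user name, the pipeline name and the `trigger` callable are all hypothetical placeholders for whatever your wrapper uses to identify the caller and to start the run.

```python
# Hypothetical allow-list: which caller may start which pipelines.
ALLOWED_PIPELINES = {
    "finance-user@example.com": {"pl_finance_etl"},
}


def trigger_if_allowed(user, pipeline_name, trigger, allowed=ALLOWED_PIPELINES):
    """Start the pipeline only if this user is allowed to run it.

    `trigger` is any callable that starts the pipeline and returns a run
    identifier; it is injected so the check stays independent of how the
    run is actually started.
    """
    if pipeline_name not in allowed.get(user, set()):
        raise PermissionError(f"{user} may not start {pipeline_name}")
    return trigger(pipeline_name)


if __name__ == "__main__":
    # Stand-in trigger that only pretends to start a run.
    def fake_trigger(name):
        return f"run-of-{name}"

    print(trigger_if_allowed("finance-user@example.com", "pl_finance_etl", fake_trigger))
```

The identity running the wrapper still needs permission to start pipelines in ADF; the per-pipeline restriction lives entirely in the wrapper.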

Many of our clients don’t want business users accessing the ADF resource in the Azure Portal at all, regardless of permissions. So we’ve created an add-on to Azure Data Factory called ChillETL. ChillETL not only makes it easy to create multiple schedules, but it also allows business users to trigger these schedules by simply clicking on a link. This process can easily be customized to limit those users to certain schedules based on their Azure Directory login.

Hi Noel,

‘it also allows business users to trigger these schedules by simply clicking on a link’ is exactly what I’m looking for. I’d be very happy if you could share how you did that.

You can contact me for a demo of ChillETL. My email is noel@clouddata.solutions

If we need to give this permission to an APIM backend, what should the URL of the pipeline be? And does it need an app registration in Entra ID with ADF as the resource, or can auth be handled by a managed identity?

I could find this URL, but it requires OAuth2:

POST https://management.azure.com/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.DataFactory/factories/{factoryName}/pipelines/{pipelineName}/createRun?api-version=2018-06-01
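For reference, a minimal Python sketch of calling that endpoint could look like the code below. It uses only the standard library and assumes you already have a bearer token for the Azure Resource Manager audience; how you obtain it (app registration, managed identity, or for example `DefaultAzureCredential().get_token("https://management.azure.com/.default")` from the `azure-identity` package) is up to your setup and not shown here. The function names are made up for this example, and the identity behind the token still needs a role on the factory that allows creating pipeline runs.

```python
import json
import urllib.request

API_VERSION = "2018-06-01"


def build_create_run_request(subscription_id, resource_group, factory_name,
                             pipeline_name, access_token, parameters=None):
    """Build (but do not send) the createRun request for one pipeline."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        "/providers/Microsoft.DataFactory"
        f"/factories/{factory_name}"
        f"/pipelines/{pipeline_name}"
        f"/createRun?api-version={API_VERSION}"
    )
    # Pipeline parameters go in the JSON body; an empty object means none.
    body = json.dumps(parameters or {}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


def start_pipeline(request):
    """Send the request and return the run ID from the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)["runId"]
```

Calling `start_pipeline(build_create_run_request(...))` with real values sends the request; the returned run ID can then be used to look up the run in the monitoring view.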