SQLKover update: When I read this old blog post of mine, all I can think about is: Power Query to the rescue!

When it comes to importing data from an Excel sheet with SSIS, Excel has quite a reputation. And not a terribly good one. Well deserved, to be honest, because numerous issues can arise when dealing with this piece of software. This blog post will deal with the issue I encounter the most on forums, which is the issue of mixed data types in one column, also known as “Why is some of my data read as NULL?”.

There are already numerous blog posts, articles and forum posts written on this subject, but most of them only deal with parts of the issue. I would like a single, comprehensive reference I can point to in the forums, hence this blog post. And because, you know, world domination has to start somewhere…

The issue

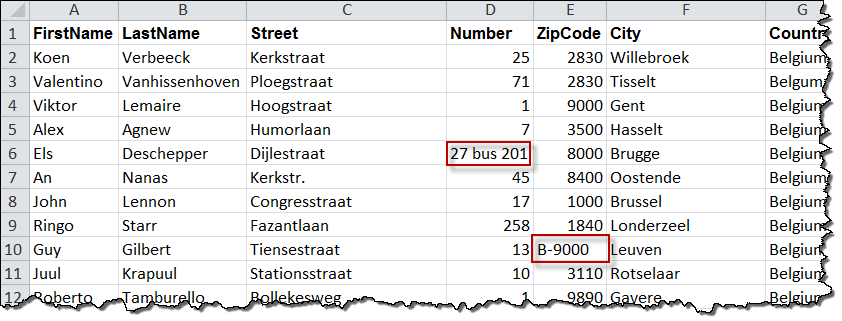

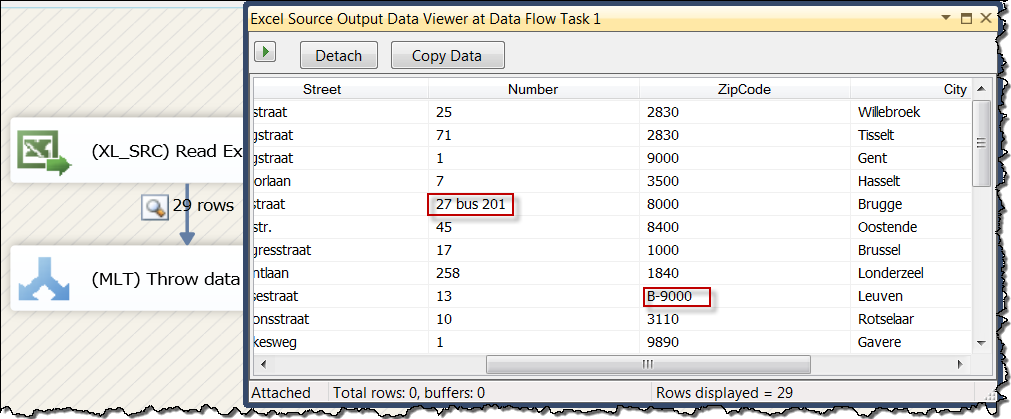

You have an Excel sheet with data that you would like to import with SSIS. One of the columns contains both text and numeric data. Take this sample as an example:

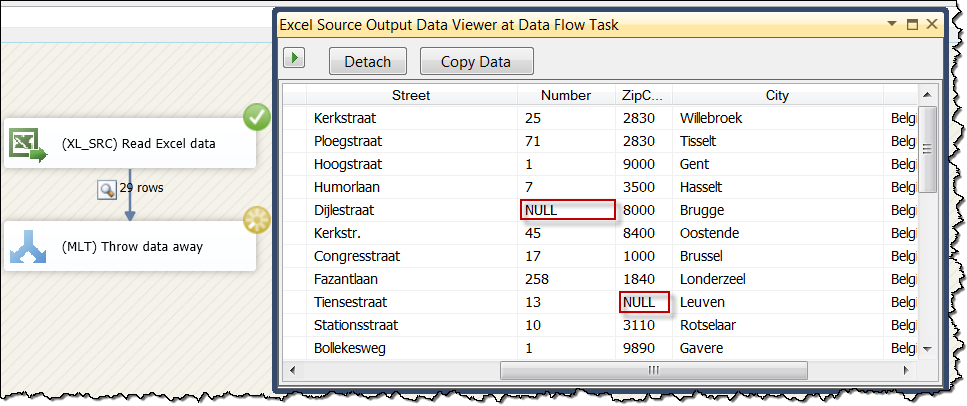

The postal code in Belgium usually consists of 4 digits. However, sometimes it is prefixed with “B-”, which makes the data alphanumeric. The house number column has a value containing the word “bus”, so it becomes alphanumeric as well. When we pull this data into the SSIS dataflow, we get the following:

All the alphanumeric data is read as NULL, while the numeric values come in untouched. The problem is not with SSIS, but with the JET OLE DB provider – used to read Excel 2003 or older – or with the ACE OLE DB provider, which is used to read Excel 2007 or newer. Both providers sample the data in a column to determine the data type. The data type with the most occurrences in the sample wins and is selected as the source data type in SSIS. In our sample, the resulting data type is numeric. Since the alphanumeric postal codes cannot be converted to a numeric value, those values are replaced with NULL. Not exactly what we want when extracting data for our ETL process.
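To make the majority rule concrete, here is a minimal Python sketch of the behaviour described above. It is a simplified model, not the provider's actual algorithm: the function names are made up for illustration, and resolving ties in favour of numeric is an assumption of this sketch.

```python
from collections import Counter


def guess_majority_type(values, sample_size=8):
    """Return 'numeric' or 'text', whichever occurs most in the sampled rows."""
    sample = values[:sample_size]
    kinds = Counter(
        "numeric" if isinstance(v, (int, float)) else "text" for v in sample
    )
    # Assumption of this sketch: a tie is resolved in favour of numeric.
    return "numeric" if kinds["numeric"] >= kinds["text"] else "text"


def read_column(values, sample_size=8):
    """Mimic the import: values that do not fit the guessed type become None."""
    if guess_majority_type(values, sample_size) == "text":
        return [str(v) for v in values]
    return [v if isinstance(v, (int, float)) else None for v in values]


# Mostly numeric postal codes with two alphanumeric ones mixed in.
postal_codes = [9000, 1000, "B-2000", 3000, 8500, "B-9300", 2800, 1500]
imported = read_column(postal_codes)
print(imported)
```

In this model the two prefixed postal codes come back as None, which is the Python counterpart of the NULL values showing up in the dataflow.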

The solution

The answer to the issue is to add IMEX=1 to the extended properties of the connection string. This tells the provider that, when intermixed data types are found, it should use the data type specified in the ImportMixedTypes registry setting, which defaults to text. (You can find a list of all the registry paths at the bottom of this blog post.) A typical connection string would look like this:

Provider=Microsoft.Jet.OLEDB.4.0;Data Source=C:\MyExcel.xls;Extended Properties="Excel 8.0;HDR=Yes;IMEX=1";

You can find more about connection strings at www.connectionstrings.com. In SSIS, you can only modify the Excel connection string manually in the properties window of the Excel connection manager. It cannot be changed through the GUI by double-clicking the connection manager. It is possible that you get a warning at the source component, telling you the metadata of some columns has changed. This is normal, as our offending columns have now changed to a string data type.
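If you generate connection strings from a script (for example to set them through an SSIS expression or a configuration file), a small helper keeps the quoting of the extended properties straight. This is only a sketch: the function name and its parameters are hypothetical, and the defaults simply mirror the JET example from this post.

```python
def excel_connection_string(
    path,
    provider="Microsoft.Jet.OLEDB.4.0",
    excel_version="Excel 8.0",
    header=True,
    imex=1,
):
    """Build an OLE DB connection string for an Excel workbook."""
    extended = f"{excel_version};HDR={'Yes' if header else 'No'};IMEX={imex}"
    # The extended properties contain semicolons, so they must be quoted as a whole.
    return f'Provider={provider};Data Source={path};Extended Properties="{extended}";'


conn = excel_connection_string(r"C:\MyExcel.xls")
print(conn)
```

For an Excel 2007 or newer workbook you would pass the ACE provider instead, for example `provider="Microsoft.ACE.OLEDB.12.0"` with `excel_version="Excel 12.0 Xml"` for an .xlsx file.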

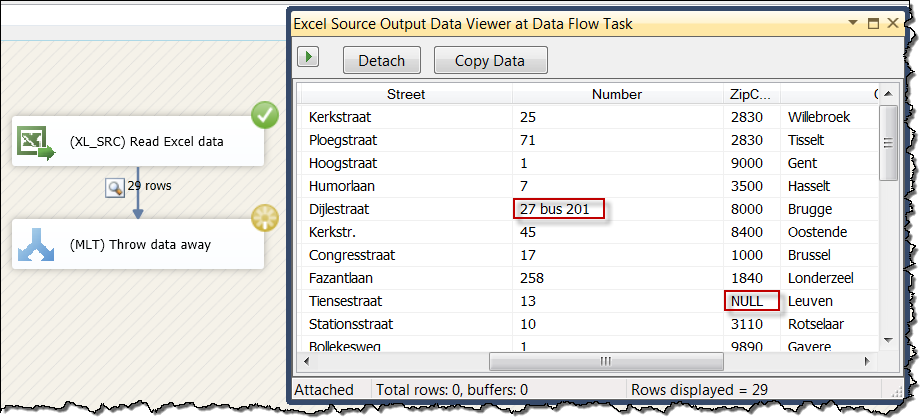

After adding IMEX=1, we get this result:

That looks a little bit better, doesn’t it?

However…

The NULL in the house number column disappeared and made place for the actual value, but the NULL in the zip code column is still present. What just happened here? Remember when I said earlier that the providers sample the data to determine the data type? The JET and ACE OLE DB providers take the first x rows, where x is the number specified in the TypeGuessRows registry setting, which has a default of 8. If the first occurrence of intermixed data types happens after this sample, the providers take the data type found in the sample. Again, big trouble for us as we’re still losing data.
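The interaction between IMEX=1 and the sample window can be mimicked in a few lines of Python. Again, this is a simplified model under stated assumptions, not the provider's real implementation: the function names are invented, and the mixed-type fallback is hard-coded to text (the default of ImportMixedTypes).

```python
def guess_type_imex(values, type_guess_rows=8):
    """With IMEX=1: a mixed sample yields text, otherwise the only kind seen."""
    sample = values[:type_guess_rows]
    kinds = {"numeric" if isinstance(v, (int, float)) else "text" for v in sample}
    if len(kinds) > 1 or kinds == {"text"}:
        return "text"
    return "numeric"


def read_column_imex(values, type_guess_rows=8):
    """Values that do not fit the guessed type are replaced with None."""
    if guess_type_imex(values, type_guess_rows) == "text":
        return [str(v) for v in values]
    return [v if isinstance(v, (int, float)) else None for v in values]


# Mixed data appears inside the first 8 rows: the column becomes text.
house_numbers = [12, "7 bus 3", 45, 8, 101, 3, 27, 64, 19, 5]
# Mixed data appears only in row 9: the sample sees nothing but numbers.
zip_codes = [9000, 1000, 3000, 8500, 2800, 1500, 2000, 9300, "B-3500", 1200]

houses = read_column_imex(house_numbers)
zips = read_column_imex(zip_codes)
print(houses[1], zips[8])
```

The house number survives as a string, while the late alphanumeric zip code is still lost because it falls outside the sampled rows. Calling `read_column_imex(zip_codes, type_guess_rows=16)` widens the sample and keeps it.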

The solution part II

Luckily the solution is fairly simple: either set the TypeGuessRows registry setting to a higher number (a maximum of 16) or set it to 0. A very popular myth is that setting it to 0 causes the providers to scan all data. However, this is not true, as indicated by this KB article. Only the first 16384 rows are scanned, which is in my opinion a pretty high number. Be aware that a performance hit is possible with larger Excel workbooks, as the provider needs to scan more data.

Make sure you change the registry property in the right version of the providers. The ACE OLE DB provider used in Office 2010 is version 14.0, but the SSIS connection string mentions version 12.0. A big thanks to Valentino (blog | twitter) who pointed me at this problem. You can find more information in his article (look at the bottom for the important update).

After updating the registry we finally get all our values:

But what if…

What if the first occurrence of intermixed data types happens after row number 16384? (You just don’t have any luck, do you?) Or what if the administrator doesn’t allow you to modify the registry? (Always the same with those paranoid people.) If you can control the Excel template, you can do the following: add a dummy row at the beginning of the sheet, which has alphanumeric data for the violating columns. This way you’ll be sure string data is always included in the sample and that the resulting data type is always alphanumeric. Hide the dummy row in the template and get rid of it in the SSIS dataflow using a conditional split.
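The dummy row trick boils down to two steps: put a recognisable text value at the top of the offending columns, and filter that row out again after the import. A rough Python illustration follows; the marker value and the function names are hypothetical, and in SSIS the second step would be the conditional split.

```python
DUMMY_MARKER = "DUMMY"  # hypothetical marker value, pick anything recognisable


def add_dummy_row(rows, text_columns):
    """Prepend a row holding text in every column that must be read as text."""
    dummy = {col: DUMMY_MARKER if col in text_columns else 0 for col in rows[0]}
    return [dummy] + rows


def drop_dummy_rows(rows, text_columns):
    """Equivalent of the conditional split: discard rows carrying the marker."""
    return [
        row for row in rows
        if not any(row[col] == DUMMY_MARKER for col in text_columns)
    ]


rows = [
    {"PostalCode": 9000, "HouseNumber": 12},
    {"PostalCode": "B-2000", "HouseNumber": "7 bus 3"},
]
text_columns = {"PostalCode", "HouseNumber"}

with_dummy = add_dummy_row(rows, text_columns)
cleaned = drop_dummy_rows(with_dummy, text_columns)
print(len(with_dummy), len(cleaned))
```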

Another alternative you could try is to switch the cell formatting from General to Text, if possible.

But what if you can’t control the template? Sometimes the Excel files are automatically generated or supplied by a third party. Suck it up and try convincing the supplier of the Excel files to use a file type that is actually intended to store flat file data, such as .csv files or ragged right flat files, instead of bug-inducing spreadsheets. Believe me; it will make your life easier.

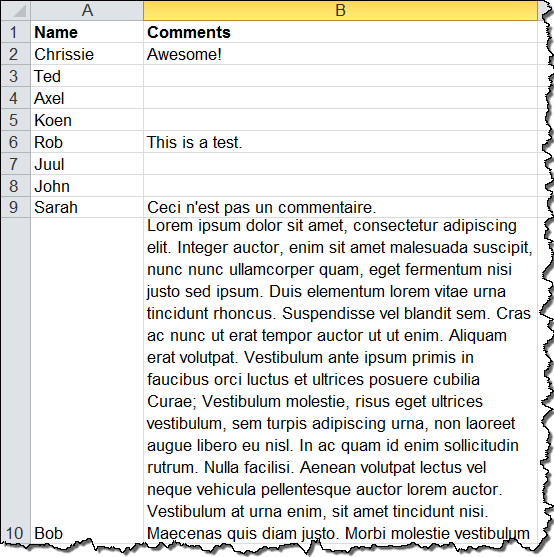

The truncated text problem

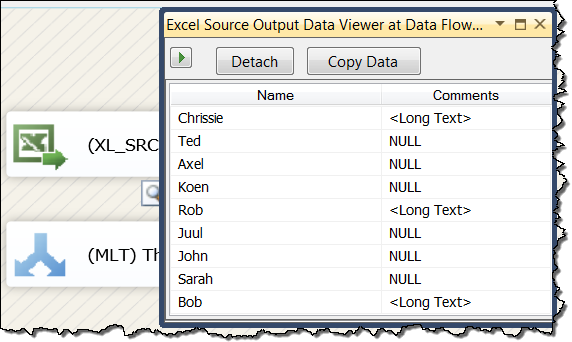

A very similar issue is when you have a text column in your Excel sheets that sometimes has more than 255 characters in a single cell. A common example is a “comments” column. When you try to import this, you can get this error in SSIS:

[Excel Source [5]] Error: There was an error with Excel Source.Outputs[Excel Source Output].Columns[Comments] on Excel Source.Outputs[Excel Source Output]. The column status returned was: “Text was truncated or one or more characters had no match in the target code page.”.

The solution part III

The problem is the same as before: the provider scans the first 8 rows and finds only text of normal length. SSIS takes the default data type of (DT_WSTR,255) for all string data. Our comments are sometimes larger than 255 characters, causing the truncation error.

If your comments have a maximum length lower than the 4000 character limit of DT_WSTR, you can simply adjust the length of the column in the advanced editor of the source component or you can configure the source to ignore the failure. The last option does give you truncated data though. This blog post by Bradley Schacht (blog | twitter) describes how you can make those changes.

If the length exceeds 4000 characters, we have a totally different problem, since it doesn’t fit into the DT_WSTR data type. We need the DT_NTEXT data type, which is a LOB data type, to store the data in the pipeline. This corresponds with an ntext or nvarchar(max) data type in SQL Server. The solution is the same as before: adjust the TypeGuessRows registry setting so the provider will pick up those lengthy comments and determine the correct data type to store the values.
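The decision between the two string types comes down to the longest value in the column. As a quick sanity check before you open the advanced editor, something like the following sketch can tell you which type you need. The function name is made up for illustration, and the thresholds are the 255 and 4000 character limits mentioned above.

```python
DEFAULT_WSTR_LENGTH = 255  # length SSIS assigns to Excel string columns by default
MAX_WSTR_LENGTH = 4000     # upper limit of DT_WSTR


def suggest_ssis_string_type(values):
    """Suggest an SSIS string type based on the longest non-empty value."""
    longest = max((len(v) for v in values if v is not None), default=0)
    if longest <= DEFAULT_WSTR_LENGTH:
        return "(DT_WSTR,255)"
    if longest <= MAX_WSTR_LENGTH:
        # Fits in DT_WSTR, but the column length has to be raised manually.
        return f"(DT_WSTR,{longest})"
    # Too long for DT_WSTR: a LOB type is required.
    return "DT_NTEXT"


comments = ["fine", None, "x" * 300]
print(suggest_ssis_string_type(comments))
```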

The values are shown as <Long Text> as the data viewer can’t show LOB data.

Sometimes SSIS and Excel stubbornly refuse to play nice together and won’t adjust the column data type. In that case you’ll have to adjust the data type yourself in the advanced editor of the Excel source.

Conclusion

This blog post deals with one of the most common issues found when working with SSIS and Excel: the determination of the source data types by the JET and ACE OLE DB providers. Most issues can easily be solved by adjusting some registry settings. Follow these easy steps to import your Excel data with the fewest issues:

- Add IMEX=1 to the connection string.

- Set the TypeGuessRows registry setting to 0.

- Double check the data type in the advanced editor of the source component.

Addendum: the registry settings

| Provider | Values | Path |
| --- | --- | --- |
| JET OLE DB | TypeGuessRows | HKEY_LOCAL_MACHINE\Software\Microsoft\Jet\4.0\Engines\Excel |
| ACE OLE DB | TypeGuessRows | HKEY_LOCAL_MACHINE\Software\Microsoft\Office\12.0\Access Connectivity Engine\Engines\Excel (*) |

(*) For newer versions of Office, you need to replace 12.0 with the correct version number. It took me only 1 hour of debugging to figure that one out.

Note: on 64-bit systems, the values for the 32-bit providers are located under the Wow6432Node key.
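If you want to check the current value from a script instead of opening regedit, Python's standard `winreg` module can read it. The sketch below is a hedged example, not a definitive tool: the helper names are hypothetical, it assumes the keys follow the usual `Jet\4.0` and `Office\<version>` layout, and the read function only works on Windows with the provider installed.

```python
def excel_engine_key(provider, office_version="12.0", wow6432=False):
    """Return the registry path (below HKEY_LOCAL_MACHINE) of the Excel engine key."""
    root = "SOFTWARE\\Wow6432Node\\Microsoft" if wow6432 else "SOFTWARE\\Microsoft"
    if provider == "JET":
        parts = [root, "Jet", "4.0", "Engines", "Excel"]
    elif provider == "ACE":
        parts = [root, "Office", office_version,
                 "Access Connectivity Engine", "Engines", "Excel"]
    else:
        raise ValueError("provider must be 'JET' or 'ACE'")
    return "\\".join(parts)


def read_type_guess_rows(key_path):
    """Read TypeGuessRows from the given key (Windows only)."""
    import winreg  # standard library, but only available on Windows

    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, key_path) as key:
        value, _value_type = winreg.QueryValueEx(key, "TypeGuessRows")
    return value


# 32-bit ACE provider of Office 2010 on a 64-bit machine.
print(excel_engine_key("ACE", office_version="14.0", wow6432=True))
```

On a Windows machine you could then call `read_type_guess_rows(excel_engine_key("ACE", "14.0", wow6432=True))` to see which value the provider will actually use.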

------------------------------------------------


Thanks Koen for posting this comprehensive list of things to take care of while importing excel data with SSIS.

Koen, been meaning to put this together on my own blog for some time – I’ll link to your comprehensive article instead. Many thanks.

One thing I would add: if you are in a situation where changing registry settings is difficult, or you don’t want to bother with changing settings every time you move or re-image machines (i.e. you have to stick to the default of 8 sampled rows), just add 8 dummy rows to the beginning of your Excel tables with the relevant type of data inserted (a bunch of ‘A’s for strings, ‘9’s for numeric, etc.) and then delete all the dummy records with a conditional split as mentioned. With this setup you can be certain the SSIS routine will work on any machine with default JET settings.

Hi James,

thanks for your comment. The dummy rows workaround is certainly a valid one. I see it popping up in forums all the time.

My opinion is that Power Query will probably make all of this a lot easier.

Cheers,

Koen

What about large numbers? If I have a number, say 847522986, then Excel translates that to 874x000e3 or whatever, and converting it back changes the number. IMEX=1 does not help with this problem. There’s got to be a solution.

Hi Cody,

which version of SSIS and Excel are you using? Which provider are you using?

I just tested it with SSIS 2016, Excel 2016 and the ACE OLEDB 12.0 provider.

I could read very large numbers just fine, even though they were formatted as scientific notation.

What format do the cells have in Excel?

I remember I had the same issue a very long time ago, with SSIS 2005. You can find a relevant thread over here:

http://www.sqlservercentral.com/Forums/Topic1082519-148-1.aspx

The link below is very useful if alphanumeric values end up as NULL in the database when loading an Excel file:

https://stackoverflow.com/questions/10102149/what-is-imex-within-oledb-connection-strings/34247426#34247426