I’m using a clustered columnstore index (CCI) on one of my fact tables at a client. The table holds between 8 and 9 million rows, and I was wondering how I could load data efficiently into this CCI using SSIS.

I stumbled upon this MSDN blog post: SQL Server 2016 SSIS Data Flow Buffer Auto Sizing capability benefits data loading on Clustered Columnstore tables (catchy title). It explains how you can set the buffer properties of the data flow to try to insert data directly into compressed row groups instead of in the delta store. They fail to achieve this using SSIS 2014 and then they explain how using the new AutoAdjustBufferSize property of SSIS 2016 works miracles and everything is loaded directly into compressed row groups. Hint: you want to avoid loading data into the delta store, as it is row storage and you need to wait for the tuple mover to load the data to the CCI in the background.

However, it’s still possible to achieve the same using SSIS 2014 (or earlier). Niko Neugebauer (blog | twitter) shows this in his post Clustered Columnstore Indexes – part 51 (“SSIS, DataFlow & Max Buffer Memory”). It still depends on the estimated row size, but using these settings you should get better results:

- Set the DefaultBufferMaxRows property of the data flow to 1,048,576, which is the maximum number of rows for a columnstore row group.

- Set the DefaultBufferSize to 100MB (104857600), which is the maximum in SSIS 2014. If (estimated row size * max number of rows) is bigger than 100MB, you’ll end up with fewer rows in the row group.

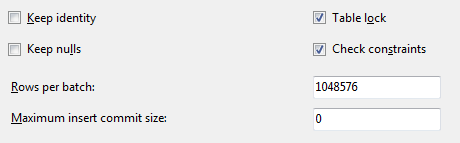
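To make the arithmetic behind these two properties concrete, here is a rough back-of-the-envelope sketch. It assumes the buffer simply holds as many rows as fit under both limits, which is a simplification of how SSIS actually sizes its buffers; the function names are mine, not SSIS properties.

```python
MAX_ROWGROUP_ROWS = 1_048_576          # maximum rows in a columnstore row group
MAX_BUFFER_BYTES = 100 * 1024 * 1024   # 104857600, the DefaultBufferSize cap cited above


def rows_per_buffer(estimated_row_bytes: int,
                    buffer_max_rows: int = MAX_ROWGROUP_ROWS,
                    buffer_bytes: int = MAX_BUFFER_BYTES) -> int:
    """Approximate rows per buffer: the smaller of the row cap and the byte cap.

    Simplifying assumption: a buffer holds buffer_bytes // estimated_row_bytes
    rows, capped at buffer_max_rows.
    """
    if estimated_row_bytes <= 0:
        raise ValueError("estimated_row_bytes must be positive")
    return min(buffer_max_rows, buffer_bytes // estimated_row_bytes)


def widest_row_for_full_row_group(buffer_bytes: int = MAX_BUFFER_BYTES,
                                  rows: int = MAX_ROWGROUP_ROWS) -> int:
    """Largest estimated row size (bytes) that still fits a full row group."""
    return buffer_bytes // rows


if __name__ == "__main__":
    print(widest_row_for_full_row_group())   # bytes per row
    for width in (50, 100, 200):
        print(width, rows_per_buffer(width))
```

Under this assumption, a full row group only fits in one buffer when the estimated row size is about 100 bytes or less; at 200 bytes per row you would get roughly half a row group per buffer.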

Set these settings in the OLE DB Destination:

- Fast Load, obviously

- Rows Per Batch: 1,048,576. This sets the ROWS_PER_BATCH hint of the INSERT BULK command.

- Maximum Insert Commit Size: 0. Although the default is around 2 billion, it can still force intermediate commits. In my case, I had a commit after 150,000 rows, so I ended up with row group sizes of 150,000 rows. They were directly compressed, but a tad too small. Setting this property to 0 will force a single commit at the end, so you finally get your data loaded directly into the CCI with a row group size of 1,048,576.

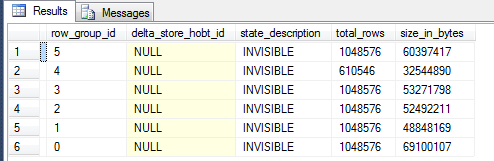
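Why the commit size matters can be sketched with a small model of where a committed batch ends up. This is not from the original post: it assumes the documented bulk-load behaviour of a CCI, where a batch of at least 102,400 rows is compressed directly and anything smaller goes to the delta store. The function name and labels are hypothetical.

```python
from typing import List, Tuple

MAX_ROWGROUP_ROWS = 1_048_576   # maximum rows in one columnstore row group
BULK_THRESHOLD = 102_400        # assumed minimum batch size for direct compression


def place_batch(batch_rows: int) -> List[Tuple[int, str]]:
    """Model where the rows of a single committed batch land.

    Full row groups are compressed directly; the remainder is compressed
    only if it reaches BULK_THRESHOLD, otherwise it goes to the delta store.
    """
    if batch_rows < 0:
        raise ValueError("batch_rows must not be negative")
    placements = []
    remaining = batch_rows
    while remaining >= MAX_ROWGROUP_ROWS:
        placements.append((MAX_ROWGROUP_ROWS, "compressed"))
        remaining -= MAX_ROWGROUP_ROWS
    if remaining >= BULK_THRESHOLD:
        placements.append((remaining, "compressed"))
    elif remaining > 0:
        placements.append((remaining, "delta store"))
    return placements


if __name__ == "__main__":
    for rows in (50_000, 150_000, 1_048_576, 1_048_577):
        print(rows, place_batch(rows))
```

In this model a commit after 150,000 rows still produces a compressed row group, just a small one, which matches what I saw before setting the commit size to 0.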

While the SSIS package was running, you could see the different row groups that were not yet committed:

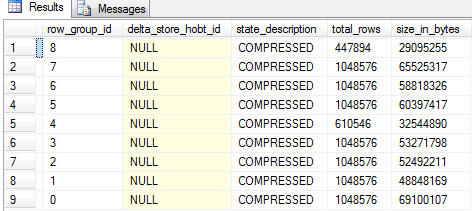

For some reason one row group doesn’t want to play ball like the others. When the SSIS package had finished, all of the row groups were in the Compressed state.
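If you want to check the row group states yourself, a sketch along these lines should do. The query uses the sys.column_store_row_groups catalog view; the `?` placeholder, the table name `dbo.FactSales` in the usage note and the helper function are assumptions for illustration only.

```python
from collections import Counter

# Query against the sys.column_store_row_groups catalog view.
# The "?" placeholder expects the (hypothetical) table name, e.g. "dbo.FactSales".
ROW_GROUP_QUERY = """
SELECT row_group_id, state_description, total_rows
FROM sys.column_store_row_groups
WHERE object_id = OBJECT_ID(?)
ORDER BY row_group_id;
"""


def summarize_row_groups(rows):
    """Return {state: (number of row groups, total rows)} for the query result.

    `rows` is any iterable of (row_group_id, state_description, total_rows).
    """
    group_count = Counter()
    row_count = Counter()
    for _row_group_id, state, total_rows in rows:
        group_count[state] += 1
        row_count[state] += total_rows or 0   # treat NULL as 0
    return {state: (group_count[state], row_count[state]) for state in group_count}


if __name__ == "__main__":
    # Made-up sample rows, only to show the shape of the summary.
    sample = [(0, "COMPRESSED", 1_048_576), (1, "OPEN", 150_000)]
    print(summarize_row_groups(sample))
```

Assuming a DB-API driver such as pyodbc, you could feed it with something like `cursor.execute(ROW_GROUP_QUERY, "dbo.FactSales").fetchall()`. Once the load has finished, every row group should show up under COMPRESSED.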

The package was still quite slow, but that was because I enforced a unique constraint on my CCI by using an indexed view, SQL Server's version of a materialized view (you have to wait till SQL Server 2016 to be able to put a rowstore index on top of a table with a CCI).

Fortunately I have put an incremental load mechanism into place, so I don’t need to load 8 million rows every time.
