On a data warehouse project, I’m using a couple of Logic Apps to do some lightweight data movements. For example: reading a SharePoint list and dumping the contents into a SQL Server table. Or reading CSV files from a OneDrive directory and putting them in Blob storage. Some of those things can be done in Azure Data Factory as well, but it’s easier and cheaper to do them with Logic Apps.

Logic Apps are essentially JSON code behind the scenes, so they should be included in the source control system of your choice (for the remainder of the blog post we’re going to assume this is git). There are a couple of options to get your Logic Apps into a git repo:

- Create a project in Visual Studio using the Azure Logic Apps Tools for Visual Studio 2019 extension. Since it’s in Visual Studio, you can directly put everything in git. However, the extension is not available for VS 2022 (and at the time of writing, there are no plans to create one). Since I was not in the mood for installing another version of VS on my machine, this option didn’t work for me. If you’re using an older version of VS, it might work for you and you can stop reading this blog post :). More info can be found here.

- There’s an extension to work with Logic Apps in Visual Studio Code. In VS Code, you connect with your Azure subscription and you’ll get a list of all your Logic Apps. You can edit them, but it’s a live connection to Azure so no code is put on your machine. However, you can right-click a Logic App and choose Add to Project. This will add a .definition.json file and a parameters.json file to a project in VS Code, which you can then check into git. This might work just fine if you don’t have a lot of Logic Apps, but manually adding all Logic Apps might be cumbersome.

- The final option is to use PowerShell, which is the topic of this blog post. It does basically the same as the previous option, but automated.

Danish PowerShell wizard Mötz Jensen has just released a PowerShell module that can assist us with this task: PSLogicAppExtractor. You can find the open-source code in this GitHub repo. The first thing to do is install the module on your machine and load it into your session:

Install-Module -Name PsLogicAppExtractor
Import-Module PsLogicAppExtractor

You also need to have either Azure PowerShell or the Azure CLI installed. For some reason, I couldn’t log into my Azure tenant with Azure PowerShell (it gave some weird error after I ran Connect-AzureRmAccount, probably because of the version of PowerShell I was using, which is PowerShell 7). So I used the Azure CLI, which worked just fine.
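Before going further, it can help to check which of these prerequisites are actually present on your machine. The following is a minimal sketch, not part of the module: Test-ExtractorPrerequisite is a hypothetical helper name, and it only reports what it finds (PowerShell version, the extractor module, the Azure CLI, and the Az.Accounts module). It does not install or log in to anything.

```powershell
# Pre-flight sketch: report which prerequisites are present on this machine.
# Test-ExtractorPrerequisite is a hypothetical helper, not part of PsLogicAppExtractor.
function Test-ExtractorPrerequisite {
    [CmdletBinding()]
    param()

    [pscustomobject]@{
        # Version of the PowerShell engine running this script
        PSVersion     = $PSVersionTable.PSVersion
        IsPowerShell7 = $PSVersionTable.PSVersion.Major -ge 7
        # True if the module is installed somewhere on $env:PSModulePath
        HasExtractor  = [bool](Get-Module -ListAvailable -Name 'PsLogicAppExtractor')
        # True if an 'az' executable can be found on the PATH
        HasAzCli      = [bool](Get-Command -Name 'az' -CommandType Application -ErrorAction SilentlyContinue)
        # True if the Az.Accounts module (which provides Connect-AzAccount) is installed
        HasAzAccounts = [bool](Get-Module -ListAvailable -Name 'Az.Accounts')
    }
}

Test-ExtractorPrerequisite | Format-List
```

If HasAzCli and HasAzAccounts both come back False, neither of the two export tasks in the runbook will be able to reach Azure.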

The main idea is that you create a runbook (you can find an example on GitHub) which contains one or more “tasks”. These tasks tell PowerShell exactly what to do with the Logic Apps. Mötz helped me with creating a runbook to extract one single Logic App:

# Object to store the needed parameters for when running the export
Properties {
    $SubscriptionId = "mysubscriptionguid"
    $ResourceGroup = "myRG"
    $Name = ""
    $ApiVersion = "2019-05-01"
}

# Used to import the needed classes into the PowerShell session, to help with the export of the Logic App
. "$(Get-PSFConfigValue -FullName PsLogicAppExtractor.ModulePath.Classes)\PsLogicAppExtractor.class.ps1"

# Path variable for all the tasks that are available from the PsLogicAppExtractor module
$pathTasks = $(Get-PSFConfigValue -FullName PsLogicAppExtractor.ModulePath.Tasks)

# Include all the tasks that are available from the PsLogicAppExtractor module
Include "$pathTasks\All\All.task.ps1"

# Array to hold all tasks for the default task
$listTasks = @()

# Building the list of tasks for the default task
# Pick ONE of these two
$listTasks += "Export-LogicApp.AzCli"
#$listTasks += "Export-LogicApp.AzAccount"

$listTasks += "ConvertTo-Raw"
$listTasks += "Set-Raw.ApiVersion"
$listTasks += "ConvertTo-Arm"
$listTasks += "Set-Arm.Connections.ManagedApis.IdFormatted"
$listTasks += "Set-Arm.Connections.ManagedApis.AsParameter"
$listTasks += "Set-Arm.LogicApp.Name.AsParameter"
$listTasks += "Set-Arm.LogicApp.Parm.AsParameter"

# Default task, which runs all the tasks above via its dependencies
Task -Name "default" -Depends $listTasks

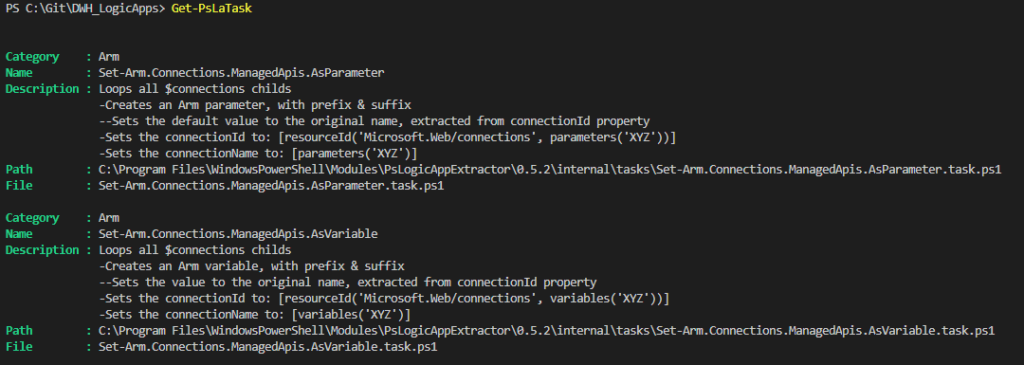

The runbook specifies a chain of tasks. For the first task, you have to choose between the Azure PowerShell and the Azure CLI version. The runbook extracts the Logic App definition, converts it to a raw format and then to an ARM template. It then creates a parameter for the location (the default is the location of the resource group) and turns all connections into parameters. I added an extra task to include the name of the Logic App as a parameter too. The final task adds user-defined parameters to the ARM template. You can find all available tasks with a short description by running the command Get-PsLaTask.

Before you can run this runbook, you’ll need to log into Azure (e.g. with the command az login if you’re using the CLI). Then you can invoke the runbook like this:

Invoke-PsLaExtractor -Runbook "pathtotherunbook" `
    -Name "MyLogicAppName" `
    -OutputPath "somefolder" `
    -ResourceGroup "myRG"

This will output the Logic App into a .json file. If you want, you can also extract the parameters into a parameter file with the following command:

Get-PsLaArmParameter -Path "pathtojsonfile" -AsFile

You need to point this command to the JSON file that was created in the previous step. The result will be a file with the same name as your Logic App and the extension .parameters.json.
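To review which parameters an extracted template exposes before you check it in, you can read the JSON and list its top-level parameters section. This is a minimal sketch that assumes the output is a standard ARM template. Get-ArmTemplateParameter is a hypothetical helper (not a command from the module), and the sample template with its parameter names is made up purely so the snippet runs on its own.

```powershell
# Hypothetical helper: list the parameters declared in an ARM template file.
function Get-ArmTemplateParameter {
    param(
        [Parameter(Mandatory)]
        [string] $Path
    )

    $template = Get-Content -LiteralPath $Path -Raw | ConvertFrom-Json

    # A template without a "parameters" section simply yields nothing
    if (-not $template.parameters) { return }

    foreach ($p in $template.parameters.PSObject.Properties) {
        [pscustomobject]@{
            Name         = $p.Name
            Type         = $p.Value.type
            DefaultValue = $p.Value.defaultValue
        }
    }
}

# Made-up sample template, written to a temp file so the sketch is self-contained
$sample = @'
{
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "logicAppName": { "type": "string", "defaultValue": "MyLogicAppName" },
    "logicAppLocation": { "type": "string", "defaultValue": "[resourceGroup().location]" }
  },
  "resources": []
}
'@
$samplePath = Join-Path ([System.IO.Path]::GetTempPath()) 'sample-logicapp.json'
Set-Content -LiteralPath $samplePath -Value $sample

Get-ArmTemplateParameter -Path $samplePath | Format-Table
```

Pointing the helper at the real file produced by Invoke-PsLaExtractor instead of the sample gives a quick overview of what the parameter file will need to supply.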

If you output to your git repo, you can then check the files in. Now, extracting one Logic App at a time is not particularly useful, so we’re going to use a wrapper around the runbook that will extract all Logic Apps from a given resource group. The script looks like this:

# Retrieve all Logic App names from Azure
$logicapps = $(az resource list `
    --subscription "subscriptionname" `
    --resource-group "myRG" `
    --resource-type "Microsoft.Logic/workflows" `
    --query "[].name" `
    --output tsv)

# Find the root folder from the path in which this script is located
$RootPath = (Split-Path $PSScriptRoot -Parent)

# In the same PowerShell folder, there should be a runbook script that parses the Logic App definitions into an ARM file
$runbookpath = $RootPath + "\PowerShell\runbook.psakefile.ps1"

foreach ($la in $logicapps) {
    #Write-Host $la

    # Extract the Logic App as an ARM file into the root folder
    # (the resource group must be the same one that was queried above)
    Invoke-PsLaExtractor -Runbook $runbookpath `
        -Name $la `
        -OutputPath $RootPath `
        -ResourceGroup "myRG"

    # Path of the Logic App ARM file that was just written to the root folder
    $armpath = $RootPath + "\" + $la + ".json"

    # Extract the parameters from the Logic App ARM file and save them into a separate parameter file
    Get-PsLaArmParameter -Path $armpath -AsFile
}

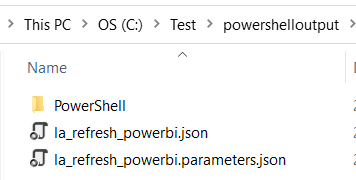

This script assumes the script itself and the runbook (named runbook.psakefile.ps1) are stored in a folder called “PowerShell”. The extracted files end up in the parent folder of that folder.

Using the Azure CLI, the names of all Logic Apps in the resource group are retrieved. For each Logic App found, we’re executing the runbook and creating a parameter file at the same time. The parameter file in itself is not useful for just checking your code into git, but it can be useful when you want to deploy the Logic Apps to another environment, which is the topic of a future blog post.
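When a resource group contains many Logic Apps, you may not want one failing extraction to stop the whole run. The sketch below wraps the same two commands in a try/catch and returns one result object per Logic App. Export-LogicAppBatch is a hypothetical function name. The sketch assumes that failures surface as terminating errors (non-terminating errors would not be caught) and that the ARM file is written as the Logic App name plus .json in the output folder, as in the script above.

```powershell
# Hypothetical wrapper: extract a list of Logic Apps and keep going when one fails.
function Export-LogicAppBatch {
    param(
        [string[]] $Name,
        [Parameter(Mandatory)] [string] $Runbook,
        [Parameter(Mandatory)] [string] $OutputPath,
        [Parameter(Mandatory)] [string] $ResourceGroup
    )

    foreach ($la in $Name) {
        # Skip blank entries that can sneak into the tsv output
        if ([string]::IsNullOrWhiteSpace($la)) { continue }

        try {
            # Discard any pipeline output so only the result objects are returned
            Invoke-PsLaExtractor -Runbook $Runbook -Name $la `
                -OutputPath $OutputPath -ResourceGroup $ResourceGroup | Out-Null

            # Assumed file-name convention: <name>.json in the output folder
            $armPath = Join-Path $OutputPath "$la.json"
            Get-PsLaArmParameter -Path $armPath -AsFile | Out-Null

            [pscustomobject]@{ Name = $la; Succeeded = $true; Error = $null }
        }
        catch {
            # Only terminating errors end up here
            [pscustomobject]@{ Name = $la; Succeeded = $false; Error = $_.Exception.Message }
        }
    }
}
```

With the variables from the script above, a call such as `Export-LogicAppBatch -Name $logicapps -Runbook $runbookpath -OutputPath $RootPath -ResourceGroup "myRG" | Where-Object { -not $_.Succeeded }` would list only the Logic Apps that could not be extracted.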

There are a couple of limitations you should be aware of:

- You need to use PowerShell 7 to work with this module. This means you cannot use the PowerShell ISE that comes with Windows (or I did something wrong, who knows?). You can use Visual Studio Code though.

- At the time of writing, custom connectors are not yet supported. Keep an eye on future updates of the module though.
